
Arivald Ha'gel


Joined: 30 Apr 14

Posts: 67

Credit: 160,674,488

RAC: 0


Hello everyone,

Is anyone running a 390 or 390X? (The 290 or 290X may have the same problem.)

I still have the problem: when I run several WUs at once, after some time (from minutes to an hour or so) some WUs start to hang and go on forever, while one or two crunch on.

I have tested drivers from 15.9 onward, always with the same problem; Windows 7 or Windows 10 doesn't matter either. I've tried different hardware setups and both new and old Windows installations, with no difference.

I hope someone can confirm the problem, so we can start searching for the root cause and maybe even a fix.

PS. Running on the 280X or 7970 doesn't give me the error. Also running one WU at a time is fine.

Running 2 Einstein@Home WUs at the same time causes calculation errors (invalid tasks).

I'm running 4 WUs on an R9 280X with no problems at all. I believe this might be strictly a 390/390X issue. I might have had such an issue a long, long time ago, but it also happened when I was computing single WUs.

Mr P Hucker

Joined: 5 Jul 11

Posts: 993

Credit: 378,328,937

RAC: 8,446


Hello everyone,

Is anyone running a 390 or 390X? (The 290 or 290X may have the same problem.)

I still have the problem: when I run several WUs at once, after some time (from minutes to an hour or so) some WUs start to hang and go on forever, while one or two crunch on.

I have tested drivers from 15.9 onward, always with the same problem; Windows 7 or Windows 10 doesn't matter either. I've tried different hardware setups and both new and old Windows installations, with no difference.

I hope someone can confirm the problem, so we can start searching for the root cause and maybe even a fix.

PS. Running on the 280X or 7970 doesn't give me the error. Also running one WU at a time is fine.

Running 2 Einstein@Home WUs at the same time causes calculation errors (invalid tasks).

My 290 hangs ALL the WUs when I run 3 or 4 at once for about 5 minutes. My solution is to just run 1 at a time; you don't gain that much anyway.

Mr P Hucker

Joined: 5 Jul 11

Posts: 993

Credit: 378,328,937

RAC: 8,446


To be fair, it's easier watching multiple things at once with the progress bar resetting. The only reason I see for changing this is aesthetics; it doesn't serve any practical purpose that I'm aware of. Maybe it would help avoid some confusion, though on the other hand the current behavior might also help illustrate how the WUs are running to people who aren't aware of them being bundled this way.

I understand some people prefer form over function though... ;)

I'll make do either way.

Cheers, guys.

I prefer function over form actually, and to have a progress bar that moves back and forth is illogical.

It doesn't exactly meet expectations, if that's what you mean, but I'm not sure that it necessarily should, given that it isn't actually running just one WU but several, one at a time, separately from each other.

But as it's only 1 bar trying to show 5 things, it just makes a mess. When it says 35%, that could be any number of positions.

Wrend

Joined: 4 Nov 12

Posts: 96

Credit: 251,528,484

RAC: 0


Discriminating against failed WUs or similar does make more sense to me, though, as they might gunk up the works a bit, even though I might technically fall into this category for the time being. I can assure you that isn't my intent. Hopefully my comments in this thread have helped shed some light on these issues as well.

Pointing fingers doesn't solve the problem. My computer has done a lot of good work for this project and, as mentioned, was among the top 5 performing hosts in the not-too-distant past. Off the top of my head, I think it got up to 4th place, and it had been within the top 10 for a few months. I hope to get it back up there again after I get these issues sorted out.

Lol. :) I wasn't talking about you at all.

I was talking about (100% or almost 100% invalid or errored tasks):

http://milkyway.cs.rpi.edu/milkyway/results.php?hostid=606779&offset=0&show_names=0&state=5&appid=

(50k invalid tasks in 2 days)

http://milkyway.cs.rpi.edu/milkyway/results.php?hostid=23432&offset=0&show_names=0&state=6&appid=

(2k invalid tasks)

http://milkyway.cs.rpi.edu/milkyway/results.php?hostid=698232&offset=0&show_names=0&state=5&appid=

(3k+ invalid tasks)

http://milkyway.cs.rpi.edu/milkyway/results.php?hostid=586986&offset=0&show_names=0&state=5&appid=

(3k+ invalid tasks)

http://milkyway.cs.rpi.edu/milkyway/results.php?hostid=694659&offset=0&show_names=0&state=5&appid=

(2k+ invalid tasks)

http://milkyway.cs.rpi.edu/milkyway/results.php?hostid=630056&offset=0&show_names=0&state=6&appid=

(almost 2k invalid tasks)

http://milkyway.cs.rpi.edu/milkyway/results.php?hostid=259168&offset=0&show_names=0&state=6&appid=

(almost 2k invalid tasks)

http://milkyway.cs.rpi.edu/milkyway/results.php?hostid=706643&offset=0&show_names=0&state=6&appid=

(almost 2k invalid tasks)

http://milkyway.cs.rpi.edu/milkyway/results.php?hostid=709083&offset=0&show_names=0&state=6&appid=

(almost 1k invalid tasks)

http://milkyway.cs.rpi.edu/milkyway/results.php?hostid=637080&offset=0&show_names=0&state=5&appid=

(500 invalid tasks)

Those hosts don't really generate ANY credit.

And those are just from 15 of my "can't validate" WUs. So there are many more of those...

My personal favorite is hosts that have a limit of 10k tasks per day and somehow receive more than 20k. After a single day, "Max tasks per day" should drop to 100 unless SOME WUs are returned correctly.
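A minimal sketch of the quota rule proposed above, assuming a per-host daily limit that is checked before each task is sent. This is a hypothetical policy written for illustration, not BOINC's actual quota code; the function names are made up, and the 10k and 100 figures are taken from the post.

```python
def next_daily_quota(current_quota: int, valid_returned_today: int,
                     penalty_quota: int = 100) -> int:
    """Hypothetical rule from the post: if a host returned no valid results
    during the last day, drop its daily task limit to `penalty_quota`."""
    if valid_returned_today == 0:
        return min(current_quota, penalty_quota)
    # The post does not say how a penalized host recovers, so the quota
    # is simply left unchanged when some valid work came back.
    return current_quota


def may_send_task(tasks_sent_today: int, quota: int) -> bool:
    """Enforce the limit strictly: never send more than `quota` tasks per day."""
    return tasks_sent_today < quota


# A host with a 10k limit that returned nothing valid today drops to 100,
# and a host that has already received 20k tasks gets no more today.
print(next_daily_quota(10_000, valid_returned_today=0))
print(may_send_task(20_000, quota=10_000))
```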

Some time ago I created a thread:

http://milkyway.cs.rpi.edu/milkyway/forum_thread.php?id=3990

which was "ignored" by the community, so apparently "we" weren't interested in server performance. I suspect it will be similar this time.

Bonus points: it was the SAME HOST that currently gives us 20k invalid results per day.

Apologies. I guess I'm just being a little defensive from the frustration of trying to sort out the issues my PC seems to be having with these new bundled WUs. I do like them overall, though wish they behaved a little better for me.

Best regards.

Wrend

Joined: 4 Nov 12

Posts: 96

Credit: 251,528,484

RAC: 0


To be fair, it's easier watching multiple things at once with the progress bar resetting. The only reason I see for changing this is aesthetics; it doesn't serve any practical purpose that I'm aware of. Maybe it would help avoid some confusion, though on the other hand the current behavior might also help illustrate how the WUs are running to people who aren't aware of them being bundled this way.

I understand some people prefer form over function though... ;)

I'll make do either way.

Cheers, guys.

I prefer function over form actually, and to have a progress bar that moves back and forth is illogical.

It doesn't exactly meet expectations, if that's what you mean, but I'm not sure that it necessarily should, given that it isn't actually running just one WU but several, one at a time, separately from each other.

But as it's only 1 bar trying to show 5 things, it just makes a mess. When it says 35%, that could be any number of positions.

I agree that that aspect of it doesn't make much sense if you're trying to see at a glance when the whole WU will end.

Mr P Hucker

Joined: 5 Jul 11

Posts: 993

Credit: 378,328,937

RAC: 8,446


To be fair, it's easier watching multiple things at once with the progress bar resetting. The only reason I see for changing this is aesthetics; it doesn't serve any practical purpose that I'm aware of. Maybe it would help avoid some confusion, though on the other hand the current behavior might also help illustrate how the WUs are running to people who aren't aware of them being bundled this way.

I understand some people prefer form over function though... ;)

I'll make do either way.

Cheers, guys.

I prefer function over form actually, and to have a progress bar that moves back and forth is illogical.

It doesn't exactly meet expectations, if that's what you mean, but I'm not sure that it necessarily should, given that it isn't actually running just one WU but several, one at a time, separately from each other.

But as it's only 1 bar trying to show 5 things, it just makes a mess. When it says 35%, that could be any number of positions.

I agree that that aspect of it doesn't make much sense if you're trying to see at a glance when the whole WU will end.

It doesn't make sense in any way. If you see it reading 5%, where is it? You have no idea which task in the bundle it's working on. If it only passes once, then you know it's a quarter of the way through the first one.
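The single-pass idea can be sketched as follows, assuming a bundle of equally sized tasks that run one after another, each reporting its own 0.0 to 1.0 progress. This is an illustration only, not the MilkyWay@home app's actual progress code; `bundle_progress` and `locate` are hypothetical names.

```python
def bundle_progress(tasks_done: int, current_fraction: float, bundle_size: int) -> float:
    """Overall fraction done for a bundle of equally sized, sequential tasks."""
    if not 0 <= tasks_done <= bundle_size:
        raise ValueError("tasks_done must be between 0 and bundle_size")
    if not 0.0 <= current_fraction <= 1.0:
        raise ValueError("current_fraction must be between 0.0 and 1.0")
    return min(1.0, (tasks_done + current_fraction) / bundle_size)


def locate(overall: float, bundle_size: int) -> tuple[int, float]:
    """Invert bundle_progress: which task (1-based) is running, and how far into it."""
    position = overall * bundle_size
    index = min(int(position), bundle_size - 1)  # clamp so 100% maps to the last task
    return index + 1, position - index


# With a 5-task bundle, a single-pass bar reading 5% means
# "task 1, a quarter of the way through".
print(bundle_progress(0, 0.25, 5))
print(locate(0.05, 5))
```

With a resetting bar, the same 5% reading could belong to any of the five tasks; the single-pass value can always be mapped back to one position.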

Wrend

Joined: 4 Nov 12

Posts: 96

Credit: 251,528,484

RAC: 0


To be fair, it's easier watching multiple things at once with the progress bar resetting. The only reason I see for changing this is aesthetics; it doesn't serve any practical purpose that I'm aware of. Maybe it would help avoid some confusion, though on the other hand the current behavior might also help illustrate how the WUs are running to people who aren't aware of them being bundled this way.

I understand some people prefer form over function though... ;)

I'll make do either way.

Cheers, guys.

I prefer function over form actually, and to have a progress bar that moves back and forth is illogical.

It doesn't exactly meet expectations, if that's what you mean, but I'm not sure that it necessarily should, given that it isn't actually running just one WU but several, one at a time, separately from each other.

But as it's only 1 bar trying to show 5 things, it just makes a mess. When it says 35%, that could be any number of positions.

I agree that that aspect of it doesn't make much sense if you're trying to see at a glance when the whole WU will end.

It doesn't make sense in any way. If you see it reading 5%, where is it? You have no idea which task in the bundle it's working on. If it only passes once, then you know it's a quarter of the way through the first one.

Yeah, proportionally in total toward being done.

As it is now, though, it's easy to see when individual tasks start and stop, moving between loading the GPU and the CPU. That has been helpful for me in troubleshooting while watching 12 WU progress bars, 12 CPU thread loads, and two GPU loads.

Perhaps it's more useful to know at a glance where the whole bundle is; I'm just not sure that it actually is.

Not up to me either way, of course. Just providing some feedback.

Arivald Ha'gel

Joined: 30 Apr 14

Posts: 67

Credit: 160,674,488

RAC: 0


More hosts generating only errors on opencl_ati_101 tasks:

http://milkyway.cs.rpi.edu/milkyway/results.php?hostid=642157&offset=0&show_names=0&state=6&appid=

http://milkyway.cs.rpi.edu/milkyway/results.php?hostid=539028&offset=0&show_names=0&state=6&appid=

Today's favorite:

http://milkyway.cs.rpi.edu/milkyway/results.php?hostid=587750&offset=0&show_names=0&state=6&appid=

(4k errors on page)

Chooka

Joined: 13 Dec 12

Posts: 101

Credit: 1,782,952,901

RAC: 0


Yeah, it sucks that the 390 cards can't run more than 1 Einstein task.

My 280 was great until it died.

I haven't tried M@H yet because I'll have to try & remember how to change the xml file.

Elvis

Joined: 9 Feb 11

Posts: 11

Credit: 60,619,139

RAC: 2,912


Hello

Just to let you know that all my computed tasks have a "calculation error" status. I don't know why, as my graphics card is not overclocked. Does anybody have an idea about that?

I will suspend Milkyway for a few days/weeks and go for other projects till it's fixed.

Wrend

Joined: 4 Nov 12

Posts: 96

Credit: 251,528,484

RAC: 0


Yeah, it sucks that the 390 cards can't run more than 1 Einstein task.

My 280 was great until it died.

I haven't tried M@H yet because I'll have to try & remember how to change the xml file.

Compared to the double-precision-optimized Nvidia cards, the AMD cards do a better job of getting through double-precision workloads without having to run several at the same time. On the Nvidia cards, for example, I have to run 6 MW@H 1.43 WUs per GPU just to load them up, and more if I want to keep them more fully loaded, which I don't.

Here's my config file. (The CPU values are currently set to these since CPU usage is set to 67% in BOINC and I run 6 other CPU tasks from Einstein@Home on the 12 threads of my CPU.)

C:\ProgramData\BOINC\projects\milkyway.cs.rpi.edu_milkyway\app_config.xml

```xml
<app_config>
   <app>
      <name>milkyway</name>
      <max_concurrent>0</max_concurrent>
      <gpu_versions>
         <gpu_usage>0.16</gpu_usage>
         <cpu_usage>0.08</cpu_usage>
      </gpu_versions>
   </app>
   <app>
      <name>milkyway_nbody</name>
      <max_concurrent>0</max_concurrent>
      <gpu_versions>
         <gpu_usage>0.16</gpu_usage>
         <cpu_usage>0.08</cpu_usage>
      </gpu_versions>
   </app>
   <app>
      <name>milkyway_separation__modified_fit</name>
      <max_concurrent>0</max_concurrent>
      <gpu_versions>
         <gpu_usage>0.19</gpu_usage>
         <cpu_usage>0.08</cpu_usage>
      </gpu_versions>
   </app>
</app_config>
```

You'll want to change these values to suit your own needs.
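When tuning these values, it can help to work out what they imply. A rough sketch, using a simplified model in which BOINC keeps adding tasks to a GPU while their summed `gpu_usage` stays at or below 1.0 (so 0.16 allows 6 tasks per GPU, matching the 6 WUs per GPU mentioned above), and ignoring `max_concurrent` and other scheduler limits. The two-GPU count is an assumption based on the "two GPU loads" mentioned earlier in the thread; `gpu_plan` is a hypothetical helper.

```python
import math
import xml.etree.ElementTree as ET
from fractions import Fraction

# Abbreviated copy of the app_config.xml above.
APP_CONFIG = """<app_config>
  <app>
    <name>milkyway</name>
    <max_concurrent>0</max_concurrent>
    <gpu_versions>
      <gpu_usage>0.16</gpu_usage>
      <cpu_usage>0.08</cpu_usage>
    </gpu_versions>
  </app>
  <app>
    <name>milkyway_separation__modified_fit</name>
    <max_concurrent>0</max_concurrent>
    <gpu_versions>
      <gpu_usage>0.19</gpu_usage>
      <cpu_usage>0.08</cpu_usage>
    </gpu_versions>
  </app>
</app_config>"""


def gpu_plan(xml_text: str, num_gpus: int) -> dict[str, tuple[int, float]]:
    """For each app: (tasks that fit on one GPU, CPUs reserved if every GPU runs it)."""
    plan = {}
    for app in ET.fromstring(xml_text).iter("app"):
        name = app.findtext("name")
        # Fraction parses the decimal strings exactly, avoiding float rounding
        # surprises when 1 / gpu_usage lands on a whole number.
        gpu_usage = Fraction(app.findtext("gpu_versions/gpu_usage"))
        cpu_usage = Fraction(app.findtext("gpu_versions/cpu_usage"))
        per_gpu = math.floor(1 / gpu_usage)
        plan[name] = (per_gpu, float(per_gpu * num_gpus * cpu_usage))
    return plan


for name, (per_gpu, cpus) in gpu_plan(APP_CONFIG, num_gpus=2).items():
    print(f"{name}: {per_gpu} tasks per GPU, {cpus:.2f} CPUs reserved")
```

Under this model, 0.16 gives 6 tasks per GPU (12 across two GPUs, reserving about 0.96 of a CPU at 0.08 each), while 0.19 gives 5 per GPU.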

Michael H.W. Weber

Joined: 22 Jan 08

Posts: 29

Credit: 242,764,135

RAC: 1,303


Is anyone running a 390 or 390X? (The 290 or 290X may have the same problem.)

I still have the problem: when I run several WUs at once, after some time (from minutes to an hour or so) some WUs start to hang and go on forever, while one or two crunch on.

I have tested drivers from 15.9 onward, always with the same problem; Windows 7 or Windows 10 doesn't matter either. I've tried different hardware setups and both new and old Windows installations, with no difference.

I hope someone can confirm the problem, so we can start searching for the root cause and maybe even a fix.

The 290X can't run MW tasks in parallel regardless of what driver I use.

By contrast, the 280X can (but I don't use it because I have both a 290X and a 280X in the same machine).

Michael.

President of Rechenkraft.net e.V. - This planet's first and largest distributed computing organization.

Wrend

Joined: 4 Nov 12

Posts: 96

Credit: 251,528,484

RAC: 0


The 1.43 work units seem to be running great now on my end (knock on wood). I haven't really changed anything from what I've previously tested recently. I did give the work units some time to settle and disperse, evening out CPU and GPU loads a bit more consistently as they complete at different times.

I'm getting better and more consistent communication with the server now too.

So... who knows for sure what caused these issues, but work units seem to be working well for me now, at least.

YMMV and best of luck!

JHMarshall

Joined: 24 Jul 12

Posts: 40

Credit: 7,123,301,054

RAC: 0


Great work! My 7950s, 7970s, and R9 280Xs are all cranking away!

Joe

Mr P Hucker

Joined: 5 Jul 11

Posts: 993

Credit: 378,328,937

RAC: 8,446


I'm getting jealous of people with a higher RAC than me. I'll have to buy an R9 Fury X (the most performance per watt you can get). They're selling second-hand for a decent price.

bluestang

Joined: 13 Oct 16

Posts: 112

Credit: 1,174,293,644

RAC: 0


You're better off getting a 7950/280 or 7970/280X. Better DP compute power. Just my 2 cents :)

Mr P Hucker

Joined: 5 Jul 11

Posts: 993

Credit: 378,328,937

RAC: 8,446


Are you sure? I've compared all the cards and worked out the cost of buying them plus the electricity they use, and an R9 Fury X is the best by far. Everything else uses as much electricity but does less work. The electricity is the main cost, not the card itself.
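The comparison described here (purchase price plus electricity, divided by the work done) can be sketched as below. All prices, hours, and throughput figures in the example call are hypothetical placeholders, not numbers from the thread; plug in your own card price, local tariff, and whichever throughput figure matters for your projects.

```python
def ownership_cost(card_price: float, watts: float, hours: float,
                   price_per_kwh: float) -> tuple[float, float]:
    """Return (purchase cost, electricity cost) over the given running time."""
    energy_kwh = watts / 1000 * hours  # W -> kW, times hours -> kWh
    return card_price, energy_kwh * price_per_kwh


def cost_per_gflops(card_price: float, watts: float, hours: float,
                    price_per_kwh: float, gflops: float) -> float:
    """Total cost of ownership divided by throughput (lower is better)."""
    purchase, electricity = ownership_cost(card_price, watts, hours, price_per_kwh)
    return (purchase + electricity) / gflops


# Hypothetical: a 250 W card bought for 200, run 24/7 for a year (8760 h)
# at 0.25 per kWh. Electricity (547.5) already exceeds the purchase price.
print(ownership_cost(200, 250, 8760, 0.25))
print(cost_per_gflops(200, 250, 8760, 0.25, gflops=1000))
```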

Arivald Ha'gel

Joined: 30 Apr 14

Posts: 67

Credit: 160,674,488

RAC: 0


Are you sure? I've compared all the cards and worked out the cost of buying them plus the electricity they use, and an R9 Fury X is the best by far. Everything else uses as much electricity but does less work. The electricity is the main cost, not the card itself.

Radeon Fury X: double-precision performance 537.6 GFLOPS; power 275 W.

Source: https://en.wikipedia.org/wiki/AMD_Radeon_Rx_300_series

Radeon 280X: double-precision performance 870.4-1024 GFLOPS; power 250 W.

Source: https://en.wikipedia.org/wiki/AMD_Radeon_Rx_200_series

The winner is clear. For single precision, though, the Fury X is better.
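Dividing the quoted double-precision figures by the quoted power figures makes the gap concrete. This only reuses the Wikipedia numbers cited above; real-world power draw under MilkyWay@home load may differ from the listed figures.

```python
# Double-precision GFLOPS and power in watts, as quoted above from Wikipedia.
CARDS = {
    "Radeon R9 Fury X": (537.6, 275),
    "Radeon R9 280X (low end of range)": (870.4, 250),
    "Radeon R9 280X (high end of range)": (1024.0, 250),
}


def gflops_per_watt(gflops: float, watts: float) -> float:
    """Throughput per watt of board power."""
    return gflops / watts


for name, (gflops, watts) in CARDS.items():
    print(f"{name}: {gflops_per_watt(gflops, watts):.2f} DP GFLOPS/W")
# Radeon R9 Fury X: 1.95 DP GFLOPS/W
# Radeon R9 280X (low end of range): 3.48 DP GFLOPS/W
# Radeon R9 280X (high end of range): 4.10 DP GFLOPS/W
```

By these figures, the 280X delivers roughly 1.8 to 2.1 times the double-precision work per watt of the Fury X.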

Mr P Hucker

Joined: 5 Jul 11

Posts: 993

Credit: 378,328,937

RAC: 8,446


I run a few projects (Einstein, SETI, Milkyway, and soon Universe if they get GPU working). I've just been going by the floating point performance on GPUboss.com.

Oh, and it's for playing games too.

|

|

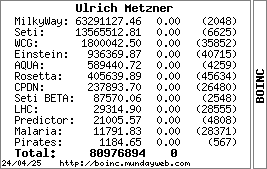

Ulrich Metzner

Send message

Joined: 11 Apr 15

Posts: 58

Credit: 63,291,127

RAC: 0


Still waiting for the update for all apps...

I'd also like to emphasize the progress bar issue: the CPU app runs like 20, 20, 40, 60, 80 -> done, not like the GPU app, which runs like 20, 40, 60, 80, 100 -> done. That is just cosmetic, but sure, it really sucks (sorry) big time... ;)

Aloha, Uli
